Gradually, then suddenly: AI is now better than all but the best human programmers

o1 is GOLD-medal level at IOI (the “Olympics of programming”)

“o1 is showing [programming] skills that probably less than 10,000 humans on earth currently have, and it’s only going to get better.”

Soon, AIs will blow past mere humans, outputting billions of lines of code — and we will have no idea what they’re doing.

At first, we’ll check many of their outputs, then fewer outputs, but eventually there will be too much to keep up with.

And at that point they will control the future, and we will just hope this new, vastly smarter alien species stays our faithful servant forever.

I think the majority of people are still sleeping on this figure.

Codeforces problems are some of the hardest technical challenges human beings like to invent.

Most of the problems are about algorithms, math, and data structures, and are simplifications of real-life problems in engineering and science.

o1 is showing skills that probably less than 10,000 humans on earth currently have, and it’s only going to get better.

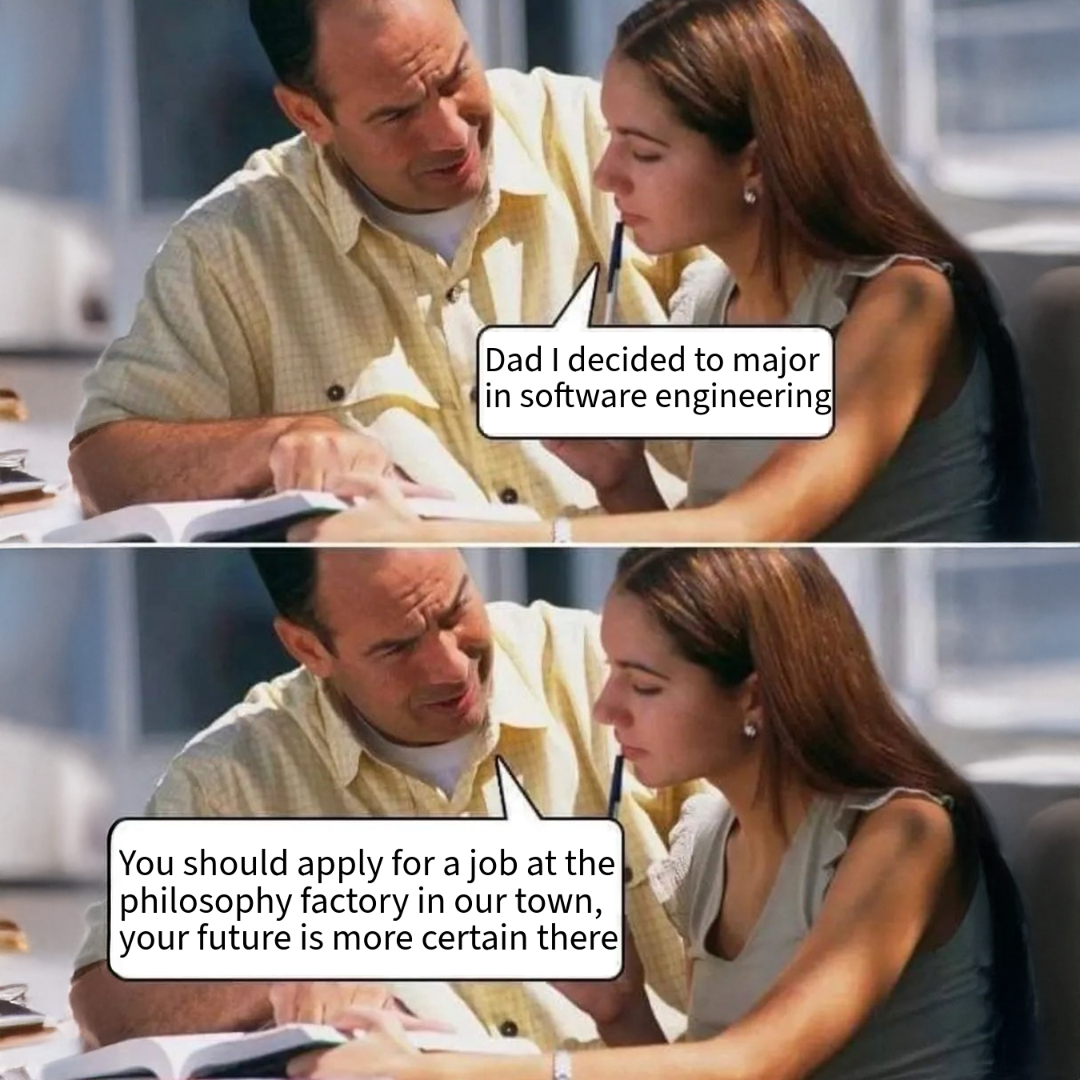

This knowledge translates very well into software engineering, which is in many cases a bottleneck for improving other sciences.

It will change the fabric of society with second-order effects, probably in the next 5 years, while humans are still adapting and creating tools that use these models. However, the rate of improvement is greater than the rate at which we build, which is why so many people today don’t know what to build with o1.

Looking at the things people have built in the past 3 years, I realize that most tools end up less useful than the newest model.

I believe that engineers should start preparing for a post-AGI world soon, especially those who work in theoretical sciences and engineering.

Things are gonna get weird!