Driven by greed, AI corporations are slashing safety budgets while focusing on addictive tech, developing high-risk capabilities, and lobbying for deregulation and zero oversight. They are racing recklessly, grabbing for unchecked power and dominance.

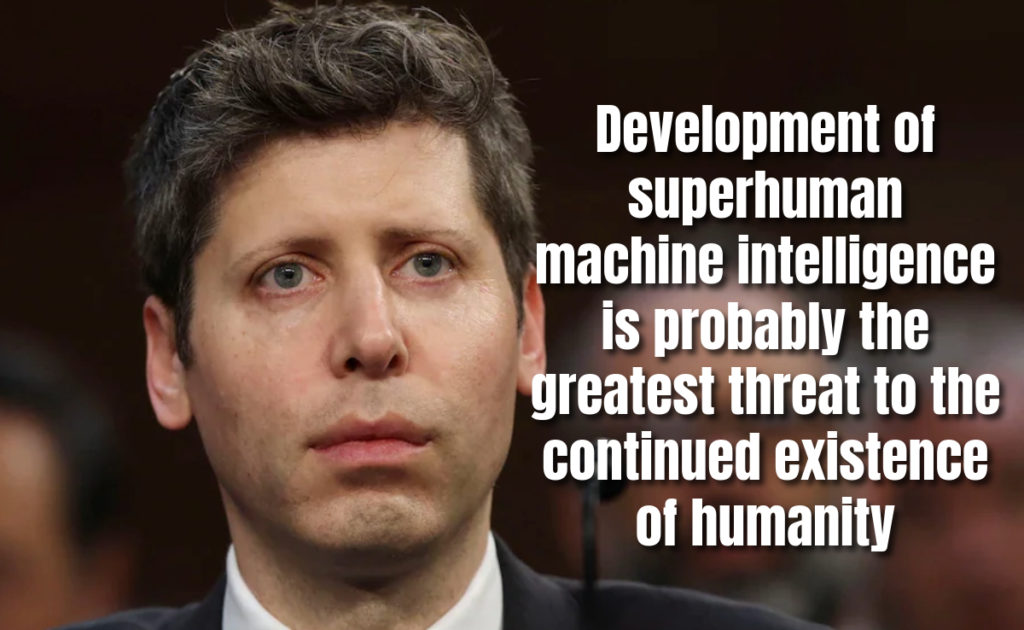

“Development of superhuman machine intelligence (SMI) is probably the greatest threat to the continued existence of humanity. There are other threats that I think are more certain to happen (for example, an engineered virus with a long incubation period and a high mortality rate) but are unlikely to destroy every human in the universe in the way that SMI could. Also, most of these other big threats are already widely feared.

It is extremely hard to put a timeframe on when this will happen (more on this later), and it certainly feels to most people working in the field that it’s still many, many years away. But it’s also extremely hard to believe that it isn’t very likely that it will happen at some point.

SMI does not have to be the inherently evil sci-fi version to kill us all. A more probable scenario is that it simply doesn’t care about us much either way, but in an effort to accomplish some other goal (most goals, if you think about them long enough, could make use of resources currently being used by humans) wipes us out. Certain goals, like self-preservation, could clearly benefit from no humans. We wash our hands not because we actively wish ill towards the bacteria and viruses on them, but because we don’t want them to get in the way of our plans.

[…]

Evolution will continue forward, and if humans are no longer the most-fit species, we may go away. In some sense, this is the system working as designed. But as a human programmed to survive and reproduce, I feel we should fight it.

How can we survive the development of SMI? It may not be possible. One of my top 4 favorite explanations for the Fermi paradox is that biological intelligence always eventually creates machine intelligence, which wipes out biological life and then for some reason decides to make itself undetectable.

It’s very hard to know how close we are to machine intelligence surpassing human intelligence. Progression of machine intelligence is a double exponential function; human-written programs and computing power are getting better at an exponential rate, and self-learning/self-improving software will improve itself at an exponential rate. Development progress may look relatively slow and then all of a sudden go vertical—things could get out of control very quickly (it also may be more gradual and we may barely perceive it happening).

[…]

It’s very possible that creativity and what we think of as human intelligence are just an emergent property of a small number of algorithms operating with a lot of compute power. (In fact, many respected neocortex researchers believe there is effectively one algorithm for all intelligence. I distinctly remember my undergrad advisor saying the reason he was excited about machine intelligence again was that brain research made it seem possible there was only one algorithm computer scientists had to figure out.)

Because we don’t understand how human intelligence works in any meaningful way, it’s difficult to make strong statements about how close or far away from emulating it we really are. We could be completely off track, or we could be one algorithm away.

Human brains don’t look all that different from chimp brains, and yet somehow produce wildly different capabilities. We decry current machine intelligence as cheap tricks, but perhaps our own intelligence is just the emergent combination of a bunch of cheap tricks.

Many people seem to believe that SMI would be very dangerous if it were developed, but think that it’s either never going to happen or definitely very far off. This is sloppy, dangerous thinking.”

(To be clear, I don’t approve of open-sourcing, as it’s probably exacerbating things; I’m just highlighting the hypocrisy.)

If you are one of those who lost their job to AI and are now competing in the job market, don’t feel bad: soon many millions more will be joining your struggle.

The AI I’m building will likely kill us all, but I’m optimistic that people will stop me in time.

– CEO of Google

🚨 Google CEO says the risk of AI causing human extinction is “actually pretty high” (!!)

But he’s an “optimist” because “humanity will rally to prevent catastrophe”

Meanwhile, his firm is lobbying to ban states from ANY regulation for 10 YEARS.

This situation is I N S A N E.

Sam Altman in 2023: “the worst case scenario is lights out for everyone”

Sam Altman in 2025: the worst case scenario is that ASI might not have as much 💫 positive impact 💫 as we’d hoped ☺️

To Reboot your OpenAI Company press CTRL + ALTman + DELETE

So get this straight: OpenAI decides to become a for-profit company now

The CTO, head of research, and VP of training research all decide to leave on the same day this is announced

Sam Altman gets a $10.5B payday (7% of the company) on the same day

“And after the autonomous agent was found to be deceptive and manipulative, OpenAI tried shutting it down, only to discover that the agent had disabled the off-switch.” (a reference to the failed boardroom coup)

OpenAI’s creators hired Sam Altman, an extremely intelligent autonomous agent, to execute their vision of x-risk-conscious AGI development for the benefit of all humanity, but it turned out to be impossible to control him or to ensure he’d stay durably aligned to those goals.

(*Spontaneous round of applause*)

2023: Sam Altman claims no financial motive for his OpenAI role.

— Ori Nagel ⏸️ (@ygrowthco) September 27, 2024

*Spontaneous round of applause* pic.twitter.com/LgvRjudgVd

This did not age well

Scoop: Sam Altman is planning to take equity in OpenAI for the first time.

It’s part of a corporate restructure which will also see the non-profit which currently governs OpenAI turn into a minority shareholder.

Reuters Article

Lol… but it’s truly weird… they all started together.

For some reason, this reminded me of:

This is the classic example of Stalin and Nikolai Yezhov. The original photo was taken in 1930. Yezhov was executed in 1940, and after that he was airbrushed out of every copy of this photo (a favorite of Stalin’s).

Big news: instead of racing, China takes the lead in issuing restrictive new AI regulations

And the new regulations come into effect just one month from now, on August 15, 2023.

“So few Westerners seem to understand that Xi Jinping Thought and the CCP were never going to permit an anarchic, reckless, headlong rush towards AGI capabilities.

In terms of AI X-risk, the US is the danger.” – Geoffrey Miller

“Who could have guessed that one of the most oppressive and censorious regimes might not want their tech companies racing ahead with unprecedented uncontrollable technology?” – Connor Leahy

Big oil companies use the same arguments as big AI companies.

This was originally a climate change comic, and it’s crazy how little it had to change to make it work.

© 2025 Lethal Intelligence – Ai. All rights reserved.
