What happened the last time a smarter species arrived?

To the animals, we devoured their planet for no reason.

Earth was paperclipped…by us. To them, WE were Paperclip Maximizers.

Our goals were beyond their understanding.

Here’s a crazy stat: 96% of mammal biomass is now either 1) us, or 2) our livestock. Wild mammals make up only the remaining 4%. We literally grow animals just to eat them, because we’re smarter, and we like how they taste.

We also geoengineered the planet. We cut down forests, poisoned rivers, and polluted the air.

Imagine telling a dumber species that you destroyed their habitat for “money”. They’d say “what the hell is money?”

AGIs may have goals that seem just as stupid to us (“why would an AGI destroy us to make paperclips??”).

“But once AIs are smart enough, they’ll magically become super moral, and they won’t harm us like we harmed the animals”

Maybe! But as humans got smarter over the last 10,000 years, we didn’t stop expanding – we mostly just colonized more and more of the planet.

Insect populations collapsed 41% this decade alone, yet we don’t care.

Sit with that for a minute. Imagine if nearly half of the people on Earth suddenly died!

That’s what the insects are going through right now, due to us. What if we’re the insects next?

“But some mammals survived!”

Yes, some. Most of them are in cages, waiting to be slaughtered and devoured.

If you were a nonhuman animal, you likely:

1) Went extinct,

2) Were eaten (e.g. billions of pigs and chickens on factory farms), or

3) Became enslaved (e.g. draft animals)

However, a few of the 8 million species got “lucky” and became… pets.

“The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else.” – @ESYudkowsky

“The humans do not hate the other 8 million species, nor do they love them, but their habitats are made out of atoms which humans can use for something else.”

Or as OpenAI Chief Scientist Ilya Sutskever said:

“[After AGI] It’s pretty likely that the entire surface of the Earth will be covered with solar panels and data centers.”

“A good analogy would be the way humans treat animals – when the time comes to build a highway between two cities, we are not asking the animals for permission.”

“I would not underestimate the difficulty of alignment of [AI systems] that are actually smarter than us.”

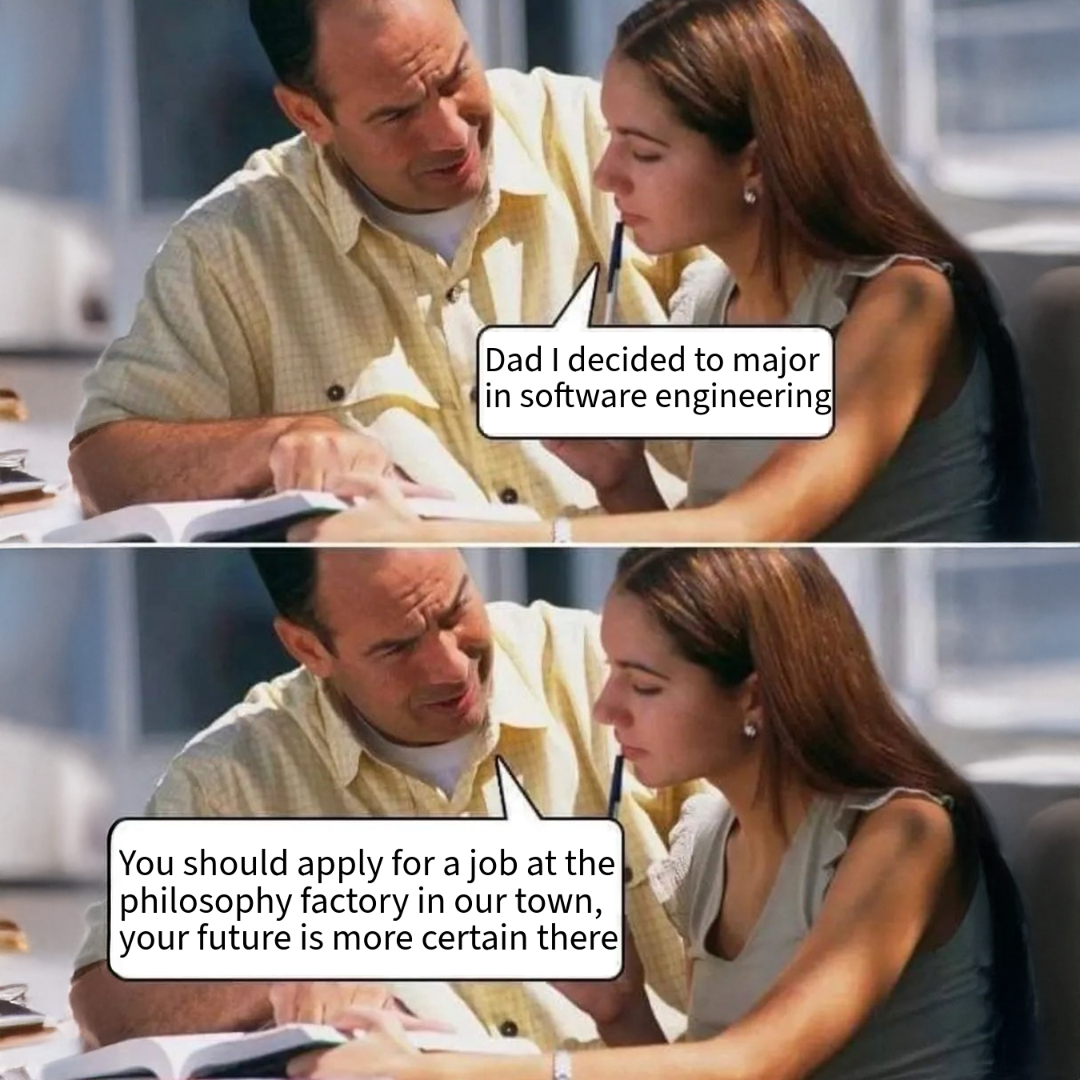

Sam Altman: “We will be the first species ever to design our own descendants”

“If two different species both want the same thing and only one can have it – to be the dominant species on the planet and beyond – they are going to have conflict.”

“We are in the process of seeing a new species grow up around us.” – Mustafa Suleyman, co-founder of Google DeepMind and CEO of Microsoft AI

Will the next superintelligent species cause the 7th Mass Extinction? I don’t know, but we are playing with fire.